📬 nenna.ai monthly newsletter - July

Hello nenna.ai fellows

Welcome to our July (holiday season) edition! This month's newsletter includes updates from Google, Figma, Hamburg DPA, and Nenna. Scroll down for more 👇

And thank you for being part of our community! 💜

TODAY'S MENU

Google

Jobs @ Nenna

Hamburg DPA

Nenna Policies

Data Security: Figma

What else was important

Google

Google Gemini sparks privacy concerns over unauthorized document access

Summary: An investigation by privacy advocate Kevin Bankston has raised concerns that Google’s Gemini AI may be accessing private documents on Google Drive without explicit user permission. Google disputes these claims, asserting that users must proactively enable Gemini and that their content is used in a privacy-preserving manner.

Details:

Automatic access to documents: Bankston reported that Gemini summarized his private tax return document without his request, suggesting Gemini might be accessing private Google Drive documents automatically.

Confusing privacy settings: Bankston found the Gemini privacy settings difficult to locate, and noted that Gemini summaries were disabled in his settings even though they had appeared. This raises questions about user control and transparency.

Potential trigger mechanism: The issue appears to be triggered by pressing the Gemini button on a document type, which then automatically enables Gemini for all future documents of the same type in Google Drive.

Why is it important: This situation underscores how critical clear and accessible privacy settings are in AI integrations. Users need transparent control over their data to prevent unauthorized access and to keep their data private and secure.

Jobs @ Nenna

We are currently looking for awesome people to join us!

Jobs:

If you know someone who is looking for a job with purpose and wants to help push a young startup forward, please reach out to [email protected]

Hamburg DPA

Navigating GDPR in LLMs: A discussion paper from Hamburg DPA

Summary: The Hamburg Commissioner for Data Protection provides a detailed analysis of how the GDPR applies to Large Language Models (LLMs). The paper argues that LLMs do not inherently store personal data, but emphasizes the need to comply with data protection laws when AI systems are trained and deployed.

Details:

Storage and processing: According to the paper, LLMs do not store personal data because they work with abstracted tokens and embeddings, so their training data cannot be reconstructed.

Data subject rights: While LLMs themselves are not directly subject to GDPR data subject rights, the inputs and outputs of AI systems using LLMs must comply with these rights.

Training compliance: The use of personal data in training LLMs must comply with GDPR, ensuring data subject rights are upheld throughout the process.

Additional note: A recent experiment disproves the Hamburg Data Protection Authority's claim that LLMs do not contain personal data by demonstrating that a trained LLM can accurately retrieve memorized training data, behaving like a database and thus proving it contains PII.

Why is it important: Understanding how the GDPR interacts with LLMs is crucial for companies and public authorities navigating the complexities of data protection in AI. It helps keep AI deployments legally compliant, protecting individual privacy while enabling technological progress. From Nenna's perspective, you need to focus on the inputs and outputs of LLMs to stay GDPR-compliant, and we can help you with that!

Nenna

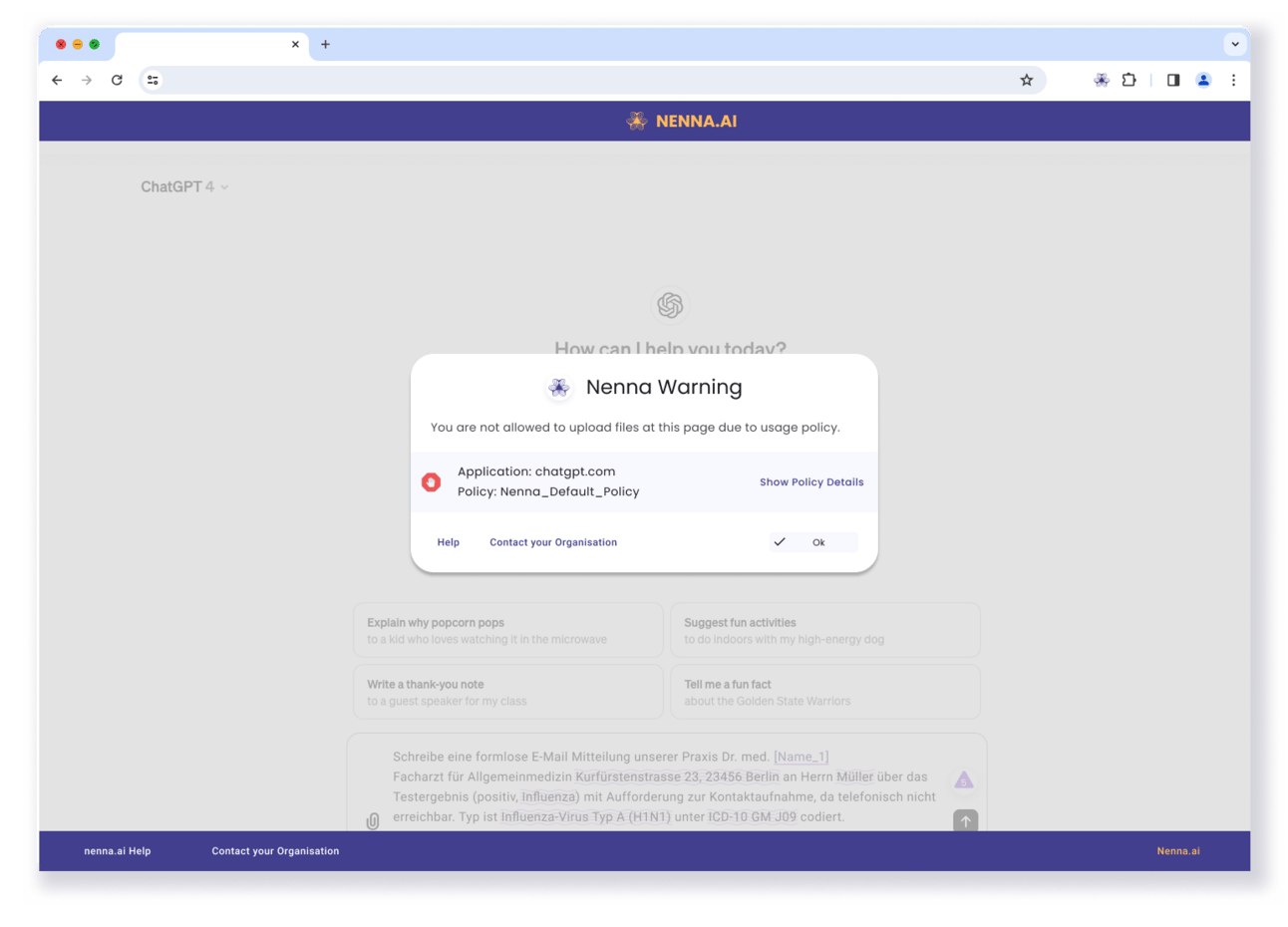

Manage policies to protect your enterprise with Nenna.ai

New month, new feature! As you know, we are currently working hard to launch Nenna this fall. In line with our dedication to secure AI, we also aim to make AI compliant for enterprises. Therefore, we will integrate policy management into Nenna, so you can manage policies for your enterprise and control which services, websites, or documents employees may use with AI. If you want to learn more and see this in detail, register for our development preview!

Join us at nenna.ai and be part of the future of secure AI! 🙌

Data Security

Figma to begin AI training on private customer data

Summary: Figma announced it will start training proprietary AI models on private customer data starting August 15th, 2024, while still allowing admins to control participation. Previously, Figma used third-party models without private data for AI features. The company outlines how different user tiers will be affected and how they can manage their settings.

Why is it important: Understanding companies' AI training policies is crucial for users to manage their data privacy and security effectively. Being able to control whether their data is used for AI training helps ensure that sensitive information is handled appropriately, while users still benefit from advanced AI features. Pay attention to the terms and conditions of services that integrate AI. Keep in mind that they all want to train their own models to become independent of large LLM providers like OpenAI, and for that they need YOUR data.

NEWS

What else was important

YouTube: An investigation by Proof News revealed that AI companies, including Apple, Nvidia, and Anthropic, have used subtitles from 173,536 YouTube videos to train their AI models without creators' consent, despite YouTube’s rules against unauthorized harvesting. While AI companies argue their actions fall under fair use and emphasize the public availability of the data, creators demand compensation and regulation for the use of their content.

SAP: Wiz Research uncovered multiple vulnerabilities in SAP AI Core, exposing significant risks to customer data and cloud environments. By exploiting these vulnerabilities, attackers could gain access to private files, cloud credentials, and internal AI artifacts, compromising the security and integrity of SAP’s AI services. SAP has since fixed these issues, emphasizing the importance of robust isolation and security measures in AI infrastructure.

AI Act: The EU Artificial Intelligence (AI) Act was published in the Official Journal of the European Union on July 12, 2024, as "Regulation (EU) 2024/1689 of the European Parliament and of the Council of 13 June 2024 laying down harmonised rules on artificial intelligence."